Excitement around modern generative machine learning, often termed artificial intelligence (AI), has recently reached peak levels. Easy-to-use tools offer compelling and convenient ways to produce dazzling images, fluent prose, and even distinctive audio. One such tool is ChatGPT, an AI chatbot trained to follow the instructions in a prompt and provide a detailed text-based response. While the quality and accuracy of its output can vary, ChatGPT's capabilities are diverse, including attempting to provide information on a given topic, writing poetry, and proofreading text.

As such, perhaps it is not surprising that many are even beginning to view these applications as heralds of a truly generalised silicon-based intelligence, although the arguments against this interpretation remain very strong. Yet, if these ambitions are achieved, does this mean that humanity's most trusted intelligence system – the brain – may soon be left behind?

In short, it seems incredibly improbable. The fact that generative AI can produce compelling products from large amounts of data should not (necessarily) be confused with generalised intelligence. Rather, these systems are useful – even inspiring – tools for a particular range of purposes. Even if the most extravagant goals proposed by AI engineers come true, these systems will still lack key characteristics that give biological systems a clear advantage for certain other functions, and generalised intelligence is arguably among them.

To begin with, training GPT models is extremely energy-intensive. Training GPT-3, the language model that previously underpinned ChatGPT, consumed an estimated 1287 MWh of energy, enough to supply more than a hundred typical households with electricity for a year, and newer models will require more. Once trained, these models still draw substantial energy whenever they are used. They are also inflexible when dealing with information outside their training environments: they cannot adapt well or learn novel tasks effectively in real time.
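To put the 1287 MWh estimate in perspective, the short Python sketch that follows converts it into an average power draw over a 30-day month and into household-years of electricity. The household figure of 10.5 MWh per year is an assumed round number chosen purely for illustration (real consumption varies widely by country and home); it is not part of the original estimate.

```python
"""Back-of-envelope conversions for the GPT-3 training-energy estimate."""

TRAINING_ENERGY_MWH = 1287.0  # estimate quoted in the article

# ASSUMPTION (illustrative only): a typical household uses ~10.5 MWh per year.
HOUSEHOLD_MWH_PER_YEAR = 10.5
HOURS_PER_MONTH = 30 * 24  # a 30-day month


def average_power_mw(energy_mwh: float, hours: float) -> float:
    """Constant power (MW) needed to deliver `energy_mwh` over `hours`."""
    return energy_mwh / hours


def household_years(energy_mwh: float, household_mwh_per_year: float) -> float:
    """Number of household-years of electricity the energy corresponds to."""
    return energy_mwh / household_mwh_per_year


def households_for_one_month(energy_mwh: float, household_mwh_per_year: float) -> float:
    """Number of households the energy could supply for a single month."""
    return energy_mwh / (household_mwh_per_year / 12)


if __name__ == "__main__":
    print(f"Average draw over a month: {average_power_mw(TRAINING_ENERGY_MWH, HOURS_PER_MONTH):.2f} MW")
    print(f"Household-years: {household_years(TRAINING_ENERGY_MWH, HOUSEHOLD_MWH_PER_YEAR):.0f}")
    print(f"Households for one month: {households_for_one_month(TRAINING_ENERGY_MWH, HOUSEHOLD_MWH_PER_YEAR):.0f}")
```

Under these assumptions, the training run corresponds to an average draw of about 1.8 MW sustained for a month, or roughly 120 household-years of electricity (about 1,470 households for one month). Changing `HOUSEHOLD_MWH_PER_YEAR` scales the household comparisons proportionally.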

Instead, AI learning takes huge amounts of data and many trials – a process that has far more human involvement than is commonly portrayed. The combination of these limitations likely precludes the ability of these systems to be 'real-time general intelligences'.

By contrast, biological intelligence systems such as the brain share none of these limitations; quite the opposite. Even simple biological neural systems, such as those found in bees, can learn extremely rapidly and navigate complex, changing environments while running on little more than 'glorified sugar water'. Of course, this is not to say that we would be better off with a hive instead of laptops. Yet it does suggest that perhaps we shouldn't look to silicon as the source of all types of intelligence. After all, the possibilities of realising the full potential of a synthetic biological intelligence are just as exciting as those of artificial ones, and almost certainly less risky.

For this reason, a growing number of teams around the world are exploring ways to harness the inherent information-processing potential of neural systems. Our team at Cortical Labs in Melbourne, Australia, recently demonstrated that cortical cells in a dish could learn to play a simplified version of the classic arcade game Pong significantly better over time (see BioNews 1163).

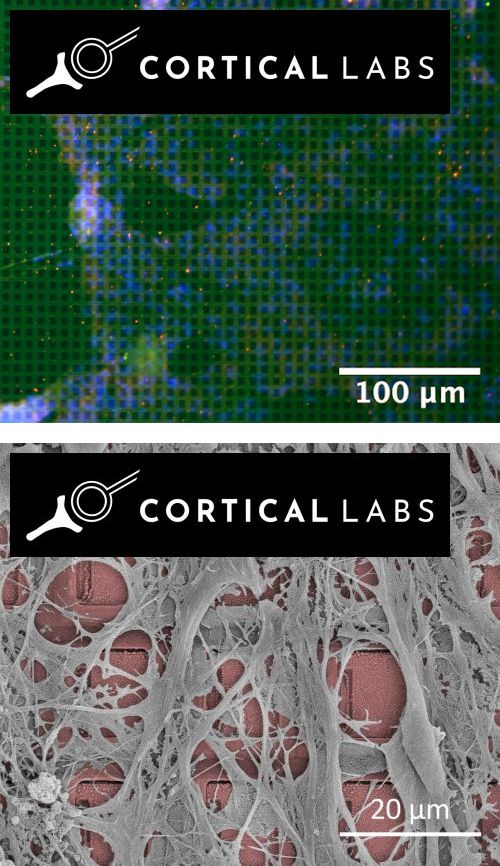

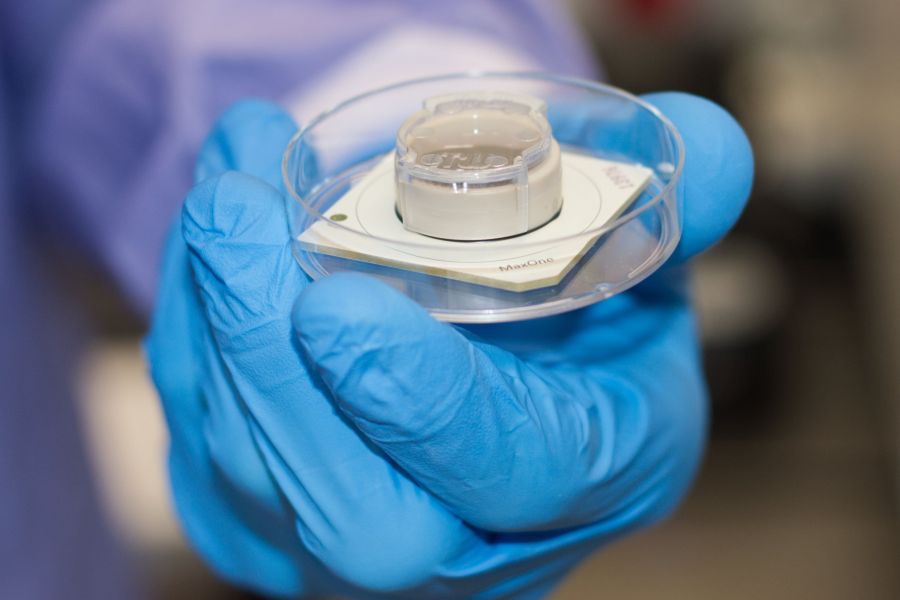

Because both silicon computers and brain cells communicate using electrical signals, we cultured neural cells on a special chip called a multielectrode array, which acted as a bridge between these two worlds. By delivering small electrical signals to the cells, we could convey where a simulated ball was relative to a simulated paddle in this virtual game world; by reading the cells' own electrical signals, we could then see how they responded.

By running an extremely fast loop that read the cells' activity, applied it to the game, and then sent the cells updated information on how their activity had changed the game world, along with feedback on their performance, we could 'embody' these cells in this virtual world. We found compelling evidence that such a system can be built, with the cells improving their performance when given the correct feedback.
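The closed loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Cortical Labs' actual software. The eight sensory electrodes, the 'place code' that encodes the ball's height, the two hypothetical motor regions whose spike counts move the paddle, and the feedback rule (a fixed, predictable pattern after a hit and a random pattern after a miss) are all assumptions made for illustration. `MockCulture` is a hypothetical stand-in that returns random activity in place of real cells on a multielectrode array.

```python
import random
from dataclasses import dataclass, field


@dataclass
class GameState:
    ball_y: float    # ball height, 0.0 (bottom) to 1.0 (top)
    paddle_y: float  # paddle centre height, same scale


def encode_ball(state: GameState, n_sensory: int = 8) -> int:
    """HYPOTHETICAL place code: choose the sensory electrode matching the ball's height."""
    return min(int(state.ball_y * n_sensory), n_sensory - 1)


def decode_paddle(paddle_y: float, up: int, down: int, step: float = 0.05) -> float:
    """HYPOTHETICAL decoder: more 'up' activity moves the paddle up, and vice versa."""
    if up > down:
        paddle_y += step
    elif down > up:
        paddle_y -= step
    return min(max(paddle_y, 0.0), 1.0)  # keep the paddle on screen


@dataclass
class MockCulture:
    """Stand-in for cells on a multielectrode array: random activity, no learning."""
    seed: int = 0
    stimulated: list = field(default_factory=list)
    feedback_log: list = field(default_factory=list)

    def __post_init__(self) -> None:
        self.rng = random.Random(self.seed)

    def stimulate(self, electrode: int) -> None:
        self.stimulated.append(electrode)

    def read_activity(self) -> tuple[int, int]:
        # Spike counts from two hypothetical motor regions ("up", "down").
        return self.rng.randint(0, 10), self.rng.randint(0, 10)

    def feedback(self, hit: bool) -> None:
        # ASSUMPTION: predictable pattern after a hit, random pattern after a miss.
        pattern = [1] * 4 if hit else [self.rng.randint(0, 1) for _ in range(4)]
        self.feedback_log.append((hit, pattern))


def run_loop(culture: MockCulture, steps: int = 200, rally: int = 10,
             half_width: float = 0.15, seed: int = 1) -> tuple[int, int]:
    """Run the stimulate -> read -> act -> feedback loop; return (hits, misses)."""
    rng = random.Random(seed)
    state = GameState(ball_y=rng.random(), paddle_y=0.5)
    hits = misses = 0
    for t in range(steps):
        culture.stimulate(encode_ball(state))                     # 1. tell the cells where the ball is
        up, down = culture.read_activity()                        # 2. read how they respond
        state.paddle_y = decode_paddle(state.paddle_y, up, down)  # 3. act in the game
        if (t + 1) % rally == 0:  # 4. ball reaches the paddle (toy: height fixed per rally)
            hit = abs(state.ball_y - state.paddle_y) <= half_width
            culture.feedback(hit)
            if hit:
                hits += 1
            else:
                misses += 1
            state.ball_y = rng.random()  # serve a new ball
    return hits, misses
```

Because `MockCulture` does not learn, its hit rate stays at chance. The sketch is only meant to show the structure of the loop (stimulate, read, act, feed back), which is where a real culture's improvement would become measurable.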

While this system is still incredibly basic, more comparable to the first transistor than even the first circuit board, its implications are exciting. We found not only that these systems learned faster than machine-learning algorithms when matched on the number of samples presented, but also that they did so through a network-wide reorganisation that may tell us something about how brains process information. Perhaps most importantly, however, this proof of concept shows that such systems can be developed ethically and sustainably.

Recent advances in hardware and software certainly allow more complex environments to be generated. However, it is the chance to use induced pluripotent stem cells (iPSCs) that provides the most compelling boost to viability. Generating iPSCs does not require destroying embryos, using tissue from deceased humans or animals, or exposing donors to any significant risk of harm. Instead, these cells can be generated from any volunteer using a small skin or blood sample. The resulting iPSCs can be massively expanded and renewed, so no further donations are necessary.

Not only does this make synthetic biological intelligence a scalable technology, it also means the technology can help people in the short term. Scientists are already developing cell lines from individual people, with the goal of finding out how each person may respond to a given treatment or disease so that personalised medicine can be developed based on their specific genetics. If we can investigate how intelligence arises in neural cells grown from a person's iPSCs, we may better understand how to help people with that genotype should they develop a disease that affects cognition.

Naturally, despite the promise of this new technology, it is also important to ensure that advances are developed and applied responsibly. For this reason, we – along with others – have ensured that this type of research includes an integrated ethics component, working with independent ethicists and philosophers from around the world. Ultimately, even this approach highlights the difference between searching for artificial intelligence and attempting to culture a synthetic biological intelligence in a dish. Currently, there is no evidence that generalised intelligence can arise in a purely artificial silicon set-up. Meanwhile, humans (and perhaps, to some extent, all living creatures) are living proof that biology can give rise to generalised intelligence. The question becomes not 'if', but 'how'.

Therefore, we need to take all relevant aspects into consideration, while also acknowledging that we need not be limited to recreating nature. Just because we use biological material such as brain cells does not mean we need to grow an exact human brain, nor would we necessarily want to. Rather, there is the far more attractive possibility of creating a system that brings the biological advantages of low power consumption and rapid, flexible learning, without the disadvantages or ethical concerns of a system that could suffer or have conscious awareness. While such systems should not be viewed as the solution to all problems, much like ChatGPT or other potential AI, they do offer new tools to supplement our existing repertoires. After all, isn’t building tools to make our lives as humans easier what intelligence is all about?

Below: Neurons on multielectrode arrays.
